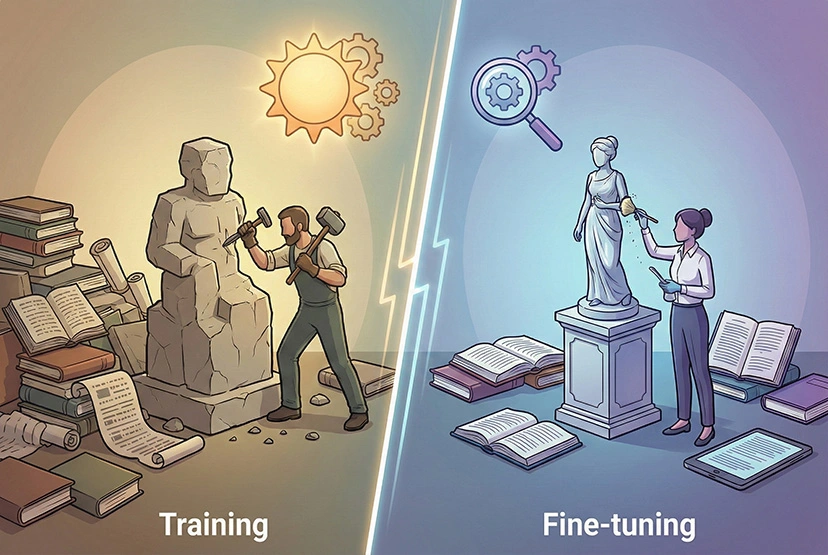

In the machine learning lifecycle, AI training and fine-tuning are two crucial yet distinct processes. Training builds a model’s core intelligence from the ground up, while fine-tuning adapts a pre-trained model for a specialized purpose. Understanding the technical and strategic differences between these approaches is essential for any business aiming to develop custom, high-performance AI solutions efficiently. This article will define both concepts, compare them directly, and provide clear guidance on when to choose each approach.

What is AI Training? Building a Model from the Ground Up

AI training is the foundational process of building a new model from scratch. This process begins with a neural network whose parameters, or weights, have not yet learned anything. These starting weights are random, but their scale matters: if the random values are too small or too large, signals and gradients can shrink or blow up as they pass through many layers, leading to slow convergence or vanishing (or exploding) gradients. To address this, initialization schemes such as Xavier (Glorot) or He initialization set the variance of the random starting weights based on each layer's number of inputs and outputs, enabling faster and more stable learning.
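As a rough illustration, the two schemes can be sketched in plain Python using their commonly cited formulas. Xavier (Glorot) uniform samples from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)). He (Kaiming) normal samples from a zero-mean normal distribution with standard deviation sqrt(2 / fan_in), and it is typically paired with ReLU activations. The function names, the list-of-lists weight layout, and the 256 -> 128 layer size are assumptions made for this sketch. In practice, deep learning frameworks ship their own initializers.

```python
import math
import random

def xavier_uniform(fan_in: int, fan_out: int, rng: random.Random) -> list[list[float]]:
    """Xavier/Glorot uniform: sample from U(-a, a) with a = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    # One row per output unit, one column per input (a fan_out x fan_in matrix).
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in: int, fan_out: int, rng: random.Random) -> list[list[float]]:
    """He/Kaiming normal: sample from N(0, std^2) with std = sqrt(2 / fan_in), commonly used with ReLU."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

rng = random.Random(42)  # seeded so the example is reproducible
w_xavier = xavier_uniform(256, 128, rng)  # hypothetical 256 -> 128 layer
w_he = he_normal(256, 128, rng)
print(len(w_xavier), len(w_xavier[0]))
```

Both functions still draw random values. The only difference from naive random initialization is that the spread of those values is tied to the layer's fan-in and fan-out.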

The core objective is to teach the model to recognize patterns, relationships, and rules by feeding it vast amounts of data. The model learns by iteratively adjusting its parameters to minimize the discrepancy, or "loss," between its predictions and the correct outputs. This optimization typically combines backpropagation, which computes the gradient of the loss with respect to every weight, with gradient descent (or one of its variants), which uses those gradients to update the weights. AI training requires large, diverse datasets and significant computational resources to build a generalized model that performs well on new, unseen data.
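Here is a minimal sketch of this loop. It assumes the simplest possible model: a single linear unit y = w*x + b, trained with mean squared error on synthetic data. Because the model has only one layer, the gradients are written out by hand. In a deep network, backpropagation applies the same chain rule layer by layer, and frameworks automate it. The function name, learning rate, epoch count, and data are illustrative choices, not recommendations.

```python
import random

def train_linear(data, lr=0.05, epochs=500):
    """Fit y ~ w*x + b with full-batch gradient descent on mean squared error (MSE)."""
    w, b = 0.0, 0.0  # a single linear unit can safely start from zero
    n = len(data)
    loss = float("inf")
    for _ in range(epochs):
        loss, grad_w, grad_b = 0.0, 0.0, 0.0
        for x, y in data:
            err = (w * x + b) - y          # forward pass: prediction minus target
            loss += err * err / n          # MSE loss (the "discrepancy")
            grad_w += 2.0 * err * x / n    # backward pass: dLoss/dw via the chain rule
            grad_b += 2.0 * err / n        # dLoss/db
        w -= lr * grad_w                   # gradient descent: step against the gradient
        b -= lr * grad_b
    return w, b, loss                      # loss is the value measured in the last epoch

# Synthetic data from y = 3x + 2 plus a little Gaussian noise (an assumed toy task).
rng = random.Random(0)
data = [(x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.1)) for x in [i / 10 for i in range(-20, 21)]]
w, b, loss = train_linear(data)
print(f"w={w:.2f}, b={b:.2f}, loss={loss:.4f}")
```

The synthetic data was generated from y = 3x + 2, so the learned w and b should end up close to 3 and 2.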

What is Fine-tuning? Adapting a Model for a Specialized Task

Fine-tuning is a deep learning technique that adapts a pre-trained model for a specific task or use case. It is a powerful form of transfer learning, leveraging the knowledge an existing model has already learned as a robust starting point. The intuition is simple: it’s easier and cheaper to hone the capabilities of a pre-trained base model than it is to train a new one from scratch.

Unlike training from scratch, fine-tuning begins with a model whose weights have already been optimized on a massive, general-purpose dataset. The goal is to tailor these capabilities for a new, often niche task using a smaller, task-specific dataset. For example, a general-purpose image recognition model can be fine-tuned to identify bees in images. This process significantly reduces the time, computational cost, and data required compared to building a model from scratch.
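The core idea can be sketched with a toy, pure-Python example under several assumptions:

- The "pre-trained" backbone is a single ReLU layer. Its weights (PRETRAINED_W, PRETRAINED_B) are hard-coded stand-ins for weights loaded from a checkpoint.
- The new task is binary classification on a deliberately tiny hypothetical dataset.
- Only a new logistic-regression head is trained, while the backbone stays frozen.

Freezing the backbone is just one fine-tuning strategy. Many setups also update some or all of the pre-trained weights, usually with a small learning rate.

```python
import math

# Hypothetical "pre-trained" backbone: in a real project these weights would be
# loaded from a checkpoint produced by large-scale training.
PRETRAINED_W = [[0.5, 0.5], [0.5, -0.5]]
PRETRAINED_B = [0.0, 0.0]

def backbone(x, W, B):
    """Frozen feature extractor: a single ReLU layer reused from pre-training."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W, B)]

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def fine_tune_head(dataset, W, B, lr=0.5, epochs=2000):
    """Train only a new logistic-regression head; W and B are never modified."""
    # Because the backbone is frozen, its features can be computed once and cached.
    feats = [(backbone(x, W, B), y) for x, y in dataset]
    n, k = len(feats), len(W)
    head_w, head_b = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for z, y in feats:
            # Gradient of the log loss with respect to the logit is (p - y).
            err = sigmoid(sum(w * zj for w, zj in zip(head_w, z)) + head_b) - y
            for j in range(k):
                grad_w[j] += err * z[j] / n
            grad_b += err / n
        head_w = [w - lr * g for w, g in zip(head_w, grad_w)]
        head_b -= lr * grad_b
    return head_w, head_b

def predict(x, W, B, head_w, head_b):
    """Run the frozen backbone, then the fine-tuned head; return a 0/1 label."""
    z = backbone(x, W, B)
    return int(sigmoid(sum(w * zj for w, zj in zip(head_w, z)) + head_b) > 0.5)

# Tiny hypothetical task-specific dataset: label 1 when x1 + x2 > 2.
small_dataset = [
    ((0.0, 0.0), 0), ((0.4, 0.0), 0), ((0.0, 0.8), 0), ((1.2, 0.0), 0),
    ((1.5, 1.5), 1), ((2.0, 1.0), 1), ((1.0, 2.2), 1), ((2.5, 2.0), 1),
]
head_w, head_b = fine_tune_head(small_dataset, PRETRAINED_W, PRETRAINED_B)
preds = [predict(x, PRETRAINED_W, PRETRAINED_B, head_w, head_b) for x, _ in small_dataset]
print(preds)
```

In the bee example, the backbone would play the role of the general image model's feature extractor, and the head would be the new "bee / not bee" classifier.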

Training vs. Fine-Tuning: A Head-to-Head Comparison

Making the Right Choice: When to Train vs. When to Fine-tune

Choosing the correct approach is a critical strategic decision that depends on your project's specific requirements, resources, and potential risks.

When to Choose AI Training

AI training is the ideal choice in the following scenarios:

- When you have access to a vast and unique dataset that is significantly different from those used for available pre-trained models.

- When no suitable pre-trained model exists for your specific task on platforms like TensorFlow Hub or Hugging Face Hub.

- When you need complete control over the model's architecture and want to avoid inheriting biases from a pre-trained model's training data.

Key Risks to Consider:

- Overfitting: If the dataset isn't large and varied enough, the model may fail to generalize.

- High computational costs: Requires expensive GPU/TPU infrastructure and substantial energy consumption.

- Time and resource intensity: Extended training periods translate to higher operational costs and longer time-to-market.

- Data requirements: Demands vast, high-quality datasets that may be costly to acquire or label.

When to Choose Fine-tuning

Fine-tuning a pre-trained model is often the more practical and efficient option in the following scenarios:

- When you have limited time, computational resources, or a smaller, task-specific dataset.

- When your new task is similar to the original task the pre-trained model was built for.

- When you want to leverage the powerful capabilities of existing foundation models to achieve high accuracy quickly.

- When cost-efficiency is a priority, since fine-tuning requires significantly fewer computational resources than training from scratch.
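Consider the common setup where the pre-trained layers are frozen and only a new output layer is trained. One concrete source of savings there is the number of parameters that need gradients and optimizer state. The arithmetic below uses hypothetical fully connected layer sizes purely for illustration. Real models and savings vary widely, and freezing layers does not remove the cost of the forward pass through them.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Number of weights plus biases in a fully connected layer."""
    return n_in * n_out + n_out

# Hypothetical sizes: a pre-trained backbone 784 -> 256 -> 128 and a new 10-class head.
backbone_params = dense_params(784, 256) + dense_params(256, 128)
head_params = dense_params(128, 10)
total_params = backbone_params + head_params

print(f"training from scratch: {total_params} trainable parameters")
print(f"fine-tuning the head only: {head_params} trainable parameters "
      f"({head_params / total_params:.2%} of the model)")
```

With these assumed sizes, 1,290 of 235,146 parameters (about 0.55%) would be trained.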

Key Risks to Consider:

- Bias propagation: Biases from the original pre-trained model can be carried over or even amplified in your fine-tuned model.

- Catastrophic forgetting: The model may lose some of its core, generalized knowledge while adapting to the new task, particularly if fine-tuning is too aggressive or the learning rate is too high.

- Overfitting: If your fine-tuning dataset is small, the model may overfit to those specific examples and lose its ability to generalize.

- License and usage restrictions: Pre-trained models may have licensing limitations regarding commercial use or modifications.
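A toy way to see the learning-rate effect behind catastrophic forgetting is to fine-tune all weights of a tiny linear model, then check its error on a few examples from the original task. The sketch makes several assumptions: the two tasks, the shared inputs, and the "pre-trained" weights are all hypothetical. Forgetting in a deep network is also far more complex than in a two-weight linear model. The point is only that, for a fixed number of steps, a larger learning rate moves the weights further from their pre-trained values.

```python
def mse(w, data):
    """Mean squared error of a linear model y = w . x (no bias term)."""
    return sum((sum(wi * xi for wi, xi in zip(w, x)) - y) ** 2 for x, y in data) / len(data)

def fine_tune(w_init, data, lr, steps):
    """Full fine-tuning: every weight is updated by full-batch gradient descent on MSE."""
    w = list(w_init)  # copy so the pre-trained weights stay intact
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w

inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
# Hypothetical tasks: the original task is y = x1 + x2, the new task is y = 2*x1 + x2.
original_task = [(x, x[0] + x[1]) for x in inputs]
new_task = [(x, 2.0 * x[0] + x[1]) for x in inputs]
pretrained = [1.0, 1.0]  # stands in for weights learned on the original task

for lr in (0.01, 0.1):
    w = fine_tune(pretrained, new_task, lr=lr, steps=50)
    print(f"lr={lr}: new-task MSE={mse(w, new_task):.3f}, "
          f"original-task MSE={mse(w, original_task):.3f}")
```

With these assumed numbers, the run at lr=0.1 fits the new task more closely than the run at lr=0.01. It also shows a higher error on the original task, which is the trade-off described under catastrophic forgetting.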