Standard Large Language Models (LLMs) operate under a significant constraint: their knowledge is static, limited to the information available at their last training update. As a result, they cannot access current events or proprietary business data, which often leads to outdated or generic responses. Moreover, without access to verified facts, these models are prone to hallucinations, generating plausible-sounding but incorrect information with high confidence.

To address these limitations, developers implement Retrieval-Augmented Generation (RAG). This architecture connects the model to external, authoritative knowledge bases and grounds its responses in current, verifiable data, which makes generative AI more reliable and trustworthy.

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation is an AI architecture that optimizes the performance of LLMs by retrieving relevant information from an external, authoritative knowledge base before generating a response. Instead of relying solely on its static training data, the model is given access to current, verifiable facts, which increases the accuracy of its answers.

While the idea of combining retrieval with generation has roots in earlier information retrieval systems, the modern RAG architecture was introduced in 2020 by researchers at Facebook AI Research and their collaborators. By pairing a learned retriever with a generative model, RAG lets the model draw on retrieved documents rather than only on what it absorbed during training, making its outputs significantly more useful for enterprise-grade applications.

How Does RAG Work? A Step-by-Step Process

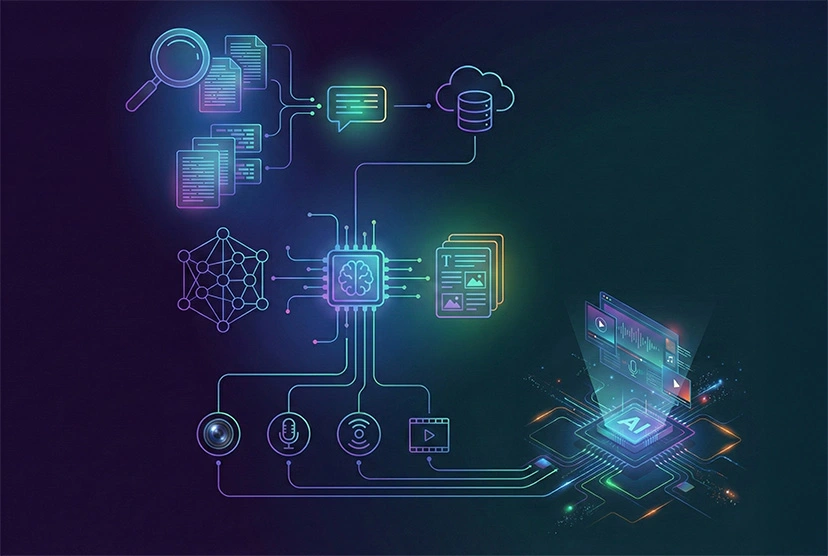

The RAG workflow combines an information retrieval component with a generative model. The process can be broken down into four key steps:

- Data Preparation and Indexing: First, external knowledge sources (such as documents, reports, or web pages) are processed. This data is broken down into smaller, manageable "chunks" so that each piece fits within the embedding model's input limit and so that the retrieved text does not overwhelm the LLM's context window. These chunks are then converted into numerical representations, called embeddings, and stored in a specialized vector database. This creates a searchable knowledge library optimized for semantic vector search.

- User Query & Retrieval: When a user submits a query, it is converted into a vector using the same embedding model that was used for the chunks. The RAG system then searches the vector database and retrieves the chunks whose vectors are closest to the query vector, that is, the chunks most semantically similar to the user's query.

- Prompt Augmentation: The system then combines the original user prompt with the retrieved information. This creates an "augmented prompt" that provides the LLM with rich, factual context directly related to the user's question.

- Content Generation: Finally, this augmented prompt is sent to the LLM. The model uses the new context from the retrieved data to generate a final response that is more accurate, relevant, and grounded in the provided facts.
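The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `embed` function is a toy bag-of-words counter standing in for a real embedding model, the in-memory list stands in for a vector database, and `llm` is a hypothetical callable that you would replace with your own model client.

```python
import math
import re
from collections import Counter

def chunk_text(text, max_words=40):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text):
    """Toy embedding (assumption): word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_index(documents, max_words=40):
    """Step 1: chunk every document and store (chunk, vector) pairs."""
    return [(chunk, embed(chunk))
            for doc in documents
            for chunk in chunk_text(doc, max_words)]

def retrieve(index, query, k=2):
    """Step 2: embed the query and return the k most similar chunks."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def augment(query, chunks):
    """Step 3: build the augmented prompt from context plus question."""
    context = "\n".join(f"- {c}" for c in chunks)
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")

def answer(index, query, llm, k=2):
    """Step 4: send the augmented prompt to the model (llm is any callable)."""
    return llm(augment(query, retrieve(index, query, k)))

docs = [
    "The refund policy allows returns within 30 days of purchase.",
    "Support is available on weekdays from 9 to 17.",
]
index = build_index(docs)
question = "How many days do I have to request a refund?"
top_chunks = retrieve(index, question, k=1)
prompt = augment(question, top_chunks)
```

In a real system each stand-in would be swapped for a dedicated component, but the data flow (index, retrieve, augment, generate) stays the same.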

Core Benefits of RAG for Businesses

Implementing RAG offers several significant advantages for organizations and developers working with generative AI.

- Access to Current and Domain-Specific Data: RAG allows LLMs to use up-to-date information and proprietary internal knowledge without undergoing costly and time-consuming retraining.

- Reduced Risk of "Hallucinations": By grounding responses in factual, retrieved data, RAG significantly reduces the likelihood of the model generating incorrect or fabricated information.

- Increased Trust and Transparency: RAG systems can be designed to return source citations alongside generated answers. This allows users to verify the information and consult the source documents for follow-up clarification, which builds trust and confidence in the AI system.

- Cost-Effective and Scalable: Compared to the high computational and financial cost of continuously retraining a foundation model, RAG is a more efficient approach. Organizations can keep the model's knowledge current simply by updating the external data sources.

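- Enhanced Data Privacy and Security: Unlike fine-tuning, RAG does not write your proprietary data into the model's weights. Documents stay in your own data store, and when both the retriever and the model run within your secure infrastructure (whether on-premises or in a private cloud), sensitive information never leaves it. If you call an externally hosted model, the retrieved chunks are still sent in the prompt, so the provider's data-handling terms apply.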

- Improved Topic Control and Relevance: RAG systems can be configured with filters and guardrails to prevent responses to questions outside their intended domain. This ensures the AI stays focused on relevant topics and refuses to answer off-topic queries, maintaining system reliability and user experience.
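Two of the benefits above, source citations and topic control, can be illustrated together. The sketch below is only one possible approach and rests on assumptions: the knowledge base entries, file names, and the `min_score` threshold are invented for illustration, and the word-count similarity is a stand-in for a real embedding model. The idea is that every chunk carries its source, and a query whose best match falls below the threshold is refused instead of being passed to the model.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding (assumption): word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical knowledge base: each chunk keeps a pointer to its source.
KNOWLEDGE_BASE = [
    {"source": "hr-handbook.pdf", "text": "Employees accrue 25 vacation days per year."},
    {"source": "it-policy.md", "text": "Laptops must use full disk encryption."},
]

def retrieve_with_sources(query, min_score=0.2, k=2):
    """Return context plus citations, or refuse if nothing is relevant enough."""
    q = embed(query)
    scored = [(cosine(q, embed(doc["text"])), doc) for doc in KNOWLEDGE_BASE]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    hits = [doc for score, doc in scored[:k] if score >= min_score]
    if not hits:
        # Guardrail: the query is outside the domain covered by the knowledge base.
        return {"refused": True, "context": [], "citations": []}
    return {
        "refused": False,
        "context": [doc["text"] for doc in hits],
        "citations": [doc["source"] for doc in hits],
    }

on_topic = retrieve_with_sources("How many vacation days do employees get per year?")
off_topic = retrieve_with_sources("What is the weather in Paris?")
```

The threshold value needs tuning against real queries; set too high it refuses legitimate questions, set too low it lets off-topic ones through.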

The Next Frontier: Multimodal RAG

As RAG technology matures, its next evolution is the integration of multiple data types beyond text. This advanced architecture is known as Multimodal RAG.

What is Multimodal RAG?

The need for multimodality is driven by a simple fact: enterprise unstructured data is often spread across multiple formats, from high-resolution images to PDFs containing a mix of text, tables, and charts. Multimodal RAG expands the capabilities of traditional RAG by incorporating and processing these diverse data types, including images, charts, tables, audio, and video files. A multimodal system can draw insights from various forms of information to generate more comprehensive and contextually accurate responses.

Key Approaches to Building a Multimodal RAG Pipeline

There are several strategies for implementing a Multimodal RAG system, each with its own trade-offs.

Grounding in a Primary Modality (Text-Translation): This approach involves converting all non-text data into text. For instance, images are described with text captions using models like KOSMOS-2, charts are linearized into tables using models like DePlot, and audio files are transcribed. This method simplifies integration but creates an "information bottleneck," as critical nuance from the original modality can be lost in translation.
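A rough sketch of this approach follows. The three converter functions are hypothetical placeholders: in practice they would call a captioning model, a chart-to-table model, and a speech-to-text model respectively. The point is the shape of the pipeline, where every item ends up as a text record that an ordinary text-only RAG index can consume.

```python
from dataclasses import dataclass

@dataclass
class Item:
    modality: str  # "text", "image", "chart", or "audio"
    payload: str   # raw text, or a file path for non-text items
    source: str

# Hypothetical converters: placeholders for real captioning,
# chart linearization, and transcription models.
def caption_image(path):
    return f"[caption of {path}]"

def chart_to_table(path):
    return f"[table extracted from {path}]"

def transcribe_audio(path):
    return f"[transcript of {path}]"

CONVERTERS = {
    "text": lambda payload: payload,
    "image": caption_image,
    "chart": chart_to_table,
    "audio": transcribe_audio,
}

def to_text_records(items):
    """Convert every item to text, keeping modality and source as metadata."""
    records = []
    for item in items:
        convert = CONVERTERS.get(item.modality)
        if convert is None:
            raise ValueError(f"unsupported modality: {item.modality}")
        records.append({
            "text": convert(item.payload),
            "modality": item.modality,
            "source": item.source,
        })
    return records

records = to_text_records([
    Item("text", "Revenue grew in the third quarter.", "report.txt"),
    Item("chart", "figures/revenue.png", "report.pdf"),
    Item("audio", "calls/earnings.wav", "earnings-call"),
])
```

Keeping the original modality and source in the metadata lets the system point users back to the original image or recording, which partly offsets the detail lost in translation.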

Embedding in a Shared Vector Space: A more advanced technique uses models like CLIP to encode different data types, such as text and images, into a common vector space. This allows for direct cross-modal retrieval, where a text query can retrieve a relevant image. A key advantage is that this approach can largely use the same text-only RAG infrastructure by simply swapping the embedding model for a multimodal one.
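The following sketch shows what cross-modal retrieval looks like once everything lives in one vector space. The three-dimensional vectors and file names are invented for illustration; in a real pipeline they would come from a multimodal encoder such as CLIP, with the query vector produced by the same model's text encoder.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# One index for all modalities. Vectors are made up (assumption);
# a multimodal encoder would produce them in practice.
INDEX = [
    {"id": "q3-report.txt", "modality": "text", "vector": [0.9, 0.1, 0.0]},
    {"id": "revenue-chart.png", "modality": "image", "vector": [0.8, 0.2, 0.1]},
    {"id": "office-photo.jpg", "modality": "image", "vector": [0.0, 0.1, 0.9]},
]

def search(query_vector, k=2, modality=None):
    """Nearest-neighbour search, optionally restricted to one modality."""
    pool = [e for e in INDEX if modality is None or e["modality"] == modality]
    ranked = sorted(pool, key=lambda e: cosine(query_vector, e["vector"]), reverse=True)
    return [e["id"] for e in ranked[:k]]

# Hypothetical embedding of the text query "quarterly revenue".
query_vector = [0.85, 0.15, 0.05]
mixed_results = search(query_vector, k=2)
image_results = search(query_vector, k=1, modality="image")
```

Because the search code never inspects the modality unless asked to filter, it is identical to what a text-only system would run; only the source of the vectors changes.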

Separate Stores with a Re-Ranker: This strategy maintains separate databases for different modalities. When a query is made, the system searches all relevant stores and then uses a dedicated multimodal re-ranker to identify and prioritize the most relevant pieces of information. This adds complexity: with M modalities and the top N chunks retrieved from each, the re-ranker must order M × N candidates before the best of them are sent to the generator.
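A small sketch of the separate-stores flow is shown below. Everything in it is an assumption made for illustration: the stores are in-memory lists, the `store_score` values are invented per-store similarities, and `rerank_score` uses simple word overlap on a text description as a stand-in for a real multimodal re-ranker. The sketch takes the top N items from each of M stores and re-scores the merged candidates on a common scale, since scores from different stores are not directly comparable.

```python
import re

# Hypothetical per-modality stores with invented, store-specific scores.
STORES = {
    "text": [
        {"id": "t1", "description": "Quarterly revenue grew 12 percent", "store_score": 0.62},
        {"id": "t2", "description": "Office relocation schedule", "store_score": 0.55},
        {"id": "t3", "description": "Holiday calendar", "store_score": 0.10},
    ],
    "image": [
        {"id": "i1", "description": "Bar chart of quarterly revenue by region", "store_score": 0.91},
        {"id": "i2", "description": "Team photo at the office", "store_score": 0.88},
    ],
}

def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def rerank_score(query, candidate):
    """Stand-in re-ranker (assumption): Jaccard word overlap with the query."""
    q, c = tokens(query), tokens(candidate["description"])
    union = q | c
    return len(q & c) / len(union) if union else 0.0

def retrieve(query, n=2, k=2):
    """Take the top n from each store, then re-rank all candidates together."""
    candidates = []
    for modality, store in STORES.items():
        best = sorted(store, key=lambda c: c["store_score"], reverse=True)[:n]
        candidates.extend({**c, "modality": modality} for c in best)
    # M stores with n candidates each give up to M * n items to re-rank.
    ranked = sorted(candidates, key=lambda c: rerank_score(query, c), reverse=True)
    return candidates, ranked[:k]

candidates, final = retrieve("quarterly revenue", n=2, k=2)
final_ids = [c["id"] for c in final]
```

Note that the image store's raw scores are higher across the board, so merging by `store_score` alone would push the irrelevant team photo ahead of the relevant text chunk; the shared re-ranking step is what corrects this.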

Challenges of Multimodal RAG

While powerful, Multimodal RAG presents several challenges that developers must address.

- Computational Complexity: Integrating multiple data types increases the computational load of the system, which can lead to slower inference times and higher operational costs.

- Data Alignment: Ensuring that embeddings from different modalities align correctly is a significant challenge. For instance, you must make sure that the semantic representation of a chart aligns with the semantic representation of the text discussing that same chart to enable accurate retrieval.

- Evaluation Metrics: Most existing benchmarks for AI performance are text-based, making it difficult to properly evaluate the quality of multimodal grounding and reasoning.

- Data Availability and Quality: High-quality, domain-specific multimodal datasets are often scarce, expensive, and difficult to create, which can be a bottleneck for training and implementation.
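One simple way to probe the alignment and evaluation challenges above is a paired-retrieval check: given text passages and the charts they discuss, measure how often each passage's nearest chart embedding is its own partner. The sketch below computes this recall@1 figure. The two-dimensional vectors are invented for illustration; real ones would come from your multimodal embedding model, and this single metric is a starting point rather than a full evaluation.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recall_at_1(text_vectors, chart_vectors):
    """Fraction of texts whose nearest chart is the paired one (same index)."""
    hits = 0
    for i, text_vec in enumerate(text_vectors):
        nearest = max(range(len(chart_vectors)),
                      key=lambda j: cosine(text_vec, chart_vectors[j]))
        hits += int(nearest == i)
    return hits / len(text_vectors)

# Invented embeddings (assumption): text_vectors[i] describes chart_vectors[i].
text_vectors = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
chart_vectors = [[0.9, 0.1], [0.2, 0.8], [1.0, 0.2]]

score = recall_at_1(text_vectors, chart_vectors)
```

In this toy data the third passage lands closer to the second chart than to its own, so the score is two out of three; a low score on real pairs suggests the modalities are not well aligned in the shared space.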

The Future of AI is Grounded and Multimodal

RAG makes generative AI more useful in real business settings by grounding answers in your own, up-to-date data instead of relying only on a static model. Multimodal RAG goes a step further, allowing you to work with text, charts, images, and other formats in one coherent workflow. For companies that want to move in this direction, Codya’s Custom AI/ML Solutions service helps design and implement RAG and Multimodal RAG solutions that fit their existing systems and data landscape.